Cloud costs are a growing challenge, and one of the core components of these costs is compute - most large organizations' AWS costs are dominated either directly or indirectly by EC2.

There's a certain complexity to EC2 costs that makes it hard to analyze the cost of its core components; you pay for an instance type that comes with varying quantities of vCPUs, RAM, GPUs, local SSD storage, network bandwidth - but all of these are aggregated into a single number, an hourly cost. With so many parameters at play, trying to discern the cost of a given vCPU out of that total hourly cost is challenging - but this is an increasingly important question in multitenant cloud environments, where organizations are interested in allocating the cost of compute resources to different workloads.

In this blog post we're going to dive into a deep analysis of the cost of CPUs in AWS.

Background and Fun Facts

AWS doesn't exactly sell CPUs; it sells virtual CPUs, or vCPUs. Beyond the obvious distinction of vCPUs being virtualized by a hypervisor, vCPUs also have the distinction of not necessarily representing an actual CPU core - in most x86 instance types, a vCPU represents a physical CPU's hyperthread. x86 hyperthreading allows a single physical CPU core to run two logical cores, so AWS is able to take e.g. a physical CPU with 48 cores and sell 96 vCPUs.

Info

Even when selling their bare metal instances, which allow customers to run workloads directly on the physical CPUs without a hypervisor underneath, the vernacular AWS uses is still vCPUs.

Now on to some AWS history - up until 2018, AWS exclusively offered Intel processors in their compute offerings. In 2018, AWS began offering AMD EPYC processors as an alternative. That same year, AWS also unveiled its own processor, designed in-house, called Graviton. In contrast to their Intel and AMD offerings, Graviton isn't an x86 processor - it is an ARM-based processor. From 2018 onwards, every new generation of relevant AWS instance families has offered a choice between Intel, AMD, and Graviton.

Info

You're probably familiar with another custom ARM offering released a couple of years after Graviton - Apple's M1. The two are massively different engineering undertakings, though. Neither Graviton nor M1 is technically a processor - both are SoCs (systems on a chip), which means that they're integrated circuits that contain many components, of which the processor is just one.

The processors in AWS's Graviton are based on ARM Neoverse cores - AWS licenses Neoverse IP from ARM (the company) and designs their own SoC around the processor - but the actual processor is not designed by AWS. Apple, by contrast, only licenses the ARM instruction set - Apple designed their processors from the ground up.

AWS instance types have some funky-looking names - for instance `m2.xlarge`, `c7g.xlarge`, `m7a.metal-48xl`, `x2iezn.metal`, `inf1.xlarge`. There is logic behind the funkiness - documented in their naming conventions - but for our purposes we need to know:

- Names are split by a `.` - the first part (e.g. `c7g`) details the instance family and type, and the second part (e.g. `xlarge`) details the instance size.
- In most instance types, you should begin by looking at the first three characters, e.g. `c7g` or `r6i`:
  - The first letter is the instance family - e.g. `c` signifies compute-optimized instances, `r` signifies memory (RAM)-optimized instances.
  - The number after the instance family signifies the generation of the offering - e.g. `c7` is a 7th-generation compute offering.
  - The letter after the number is one of `a`, `i`, or `g` - representing AMD, Intel, and Graviton respectively. This letter only exists for offerings from 2018 onwards - previous generations didn't require it because only Intel was available. So for older generations you'll see just `c4.xlarge` but for newer generations you'll see `c6i.xlarge`, `c6a.xlarge`, and `c6g.xlarge`. Older instance types might have a different letter after the generation - e.g. `r5d.xlarge` - but don't be confused, this only details capabilities of the instance type (in this case, the `d` suffix means that the instance type has a local SSD attached).
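The naming rules above can be sketched as a small parser. This is only an illustration under simplifying assumptions: the function name `parse_instance_type`, the regular expression, and the returned fields are all made up for this example, and the pattern covers only names shaped like the ones listed above (AWS's full naming scheme has more cases).

```python
import re
from typing import NamedTuple, Optional

# Processor letters as described in the text: a = AMD, i = Intel, g = Graviton.
PROCESSOR_LETTERS = {"a": "AMD", "i": "Intel", "g": "Graviton"}

# Hypothetical pattern: family letters, generation digits, optional suffix, ".", size.
NAME_RE = re.compile(r"^([a-z]+?)(\d+)([a-z-]*)\.(.+)$")


class InstanceName(NamedTuple):
    family: str               # e.g. "c" for compute-optimized
    generation: int           # e.g. 7
    processor: Optional[str]  # None when the name carries no a/i/g letter
    capabilities: str         # remaining suffix letters, e.g. "d" for local SSD
    size: str                 # e.g. "xlarge"


def parse_instance_type(name: str) -> InstanceName:
    match = NAME_RE.match(name)
    if match is None:
        raise ValueError(f"unrecognized instance type name: {name!r}")
    family, generation, suffix, size = match.groups()
    processor = None
    # The processor letter, when present, is the first letter after the generation.
    if suffix and suffix[0] in PROCESSOR_LETTERS:
        processor = PROCESSOR_LETTERS[suffix[0]]
        suffix = suffix[1:]
    return InstanceName(family, int(generation), processor, suffix, size)


print(parse_instance_type("c7g.xlarge"))
print(parse_instance_type("r5d.xlarge"))
```

A `processor` of `None` means the name has no processor letter; per the rule above, for older generations that implies Intel, but the sketch leaves that interpretation to the caller.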

There's one last thing we need to know before moving forward - if an instance costs $D$ dollars, has $C$ vCPUs, and has $M$ GB of RAM - how can we derive the cost of a vCPU for this instance type? For now let's ignore anything else that might affect the pricing and assume that it's a function of vCPUs and RAM alone.

AWS's semi-official ratio of CPU-GB cost is 9:1 - one vCPU costs as much as nine GB of RAM. This can be inferred indirectly from their AWS Fargate pricing, where a vCPU-hour costs about nine times as much as a GB-hour, and is explicitly documented as the calculation behind EKS/ECS split cost allocation in Cost and Usage Reports.

Let's say $x$ is the cost of a single vCPU, then the cost of a single GB of RAM is $\frac{x}{9}$ - and we can write $D = C \cdot x + M \cdot \frac{x}{9}$. We can solve for $x$ to get $x = \frac{D}{C + \frac{M}{9}}$. Or put differently:

$$x = \frac{9D}{9C + M}$$
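As a quick sanity check of this derivation, here is a minimal sketch in Python. The function name `vcpu_cost` and its parameter names are made up for illustration, the 9:1 ratio is the one described above, and the example instance (4 vCPUs, 8 GB, 100 dollars a month) is hypothetical rather than real AWS pricing.

```python
def vcpu_cost(instance_cost: float, vcpus: float, memory_gb: float,
              cpu_to_gb_ratio: float = 9.0) -> float:
    """Cost of one vCPU, assuming one vCPU costs as much as `cpu_to_gb_ratio` GB of RAM.

    Solves instance_cost = vcpus * x + memory_gb * x / cpu_to_gb_ratio for x.
    """
    return instance_cost / (vcpus + memory_gb / cpu_to_gb_ratio)


# Hypothetical instance: 4 vCPUs, 8 GB of RAM, 100 dollars a month.
x = vcpu_cost(instance_cost=100.0, vcpus=4, memory_gb=8)
print(f"vCPU: {x:.2f}, GB of RAM: {x / 9:.2f}")

# Plugging the result back in should reproduce the instance cost.
print(f"reconstructed cost: {4 * x + 8 * x / 9:.2f}")
```

Keeping the ratio as a parameter makes it easy to see how sensitive the per-vCPU figure is to the 9:1 assumption.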

The question of "the cost of a vCPU" is a function of the type of processor used in an instance. Moreover, there's the ever-present dimension in AWS of the region we're running from - the same instance type will have different costs in different regions. We expect that calculating the vCPU cost for two different instances in the same region should yield the same result if they have the same processor.

We'll be looking at three instance families - the `c` family (compute-optimized), the `m` family (general purpose, supposedly because m stands for "most scenarios"), and the `r` family (memory-optimized, r stands for RAM) - and we'll be focusing on instance types that don't have any special abilities (e.g. we won't be looking at `c6gn` which has enhanced networking capabilities). We'll be looking at these instance type families because they don't have anything "extra" that affects the costs beyond the RAM and CPU. If we end up with the same vCPU cost for a given processor across all three instance families, we can gain confidence that our methodology is correct.
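One way to express this selection is a filter on the instance type name. The sketch below is an assumption about how such a filter might look, not the exact rule used for the analysis: the name `is_plain_cmr` and the pattern are made up, and it treats any extra suffix letter (such as the `n` in `c6gn` or the `d` in `r5d`) as a special ability.

```python
import re

# Family c, m, or r; a generation number; at most one processor letter (a, i, or g);
# then the "." that starts the size. Anything else after the generation is rejected.
PLAIN_CMR = re.compile(r"^[cmr]\d+[aig]?\.")


def is_plain_cmr(api_name: str) -> bool:
    """True for non-specialized c/m/r instance types such as c6g.xlarge or m4.large."""
    return PLAIN_CMR.match(api_name) is not None


names = ["c6g.xlarge", "c6gn.xlarge", "r5d.xlarge", "m4.large", "x2iezn.metal"]
print([name for name in names if is_plain_cmr(name)])
```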

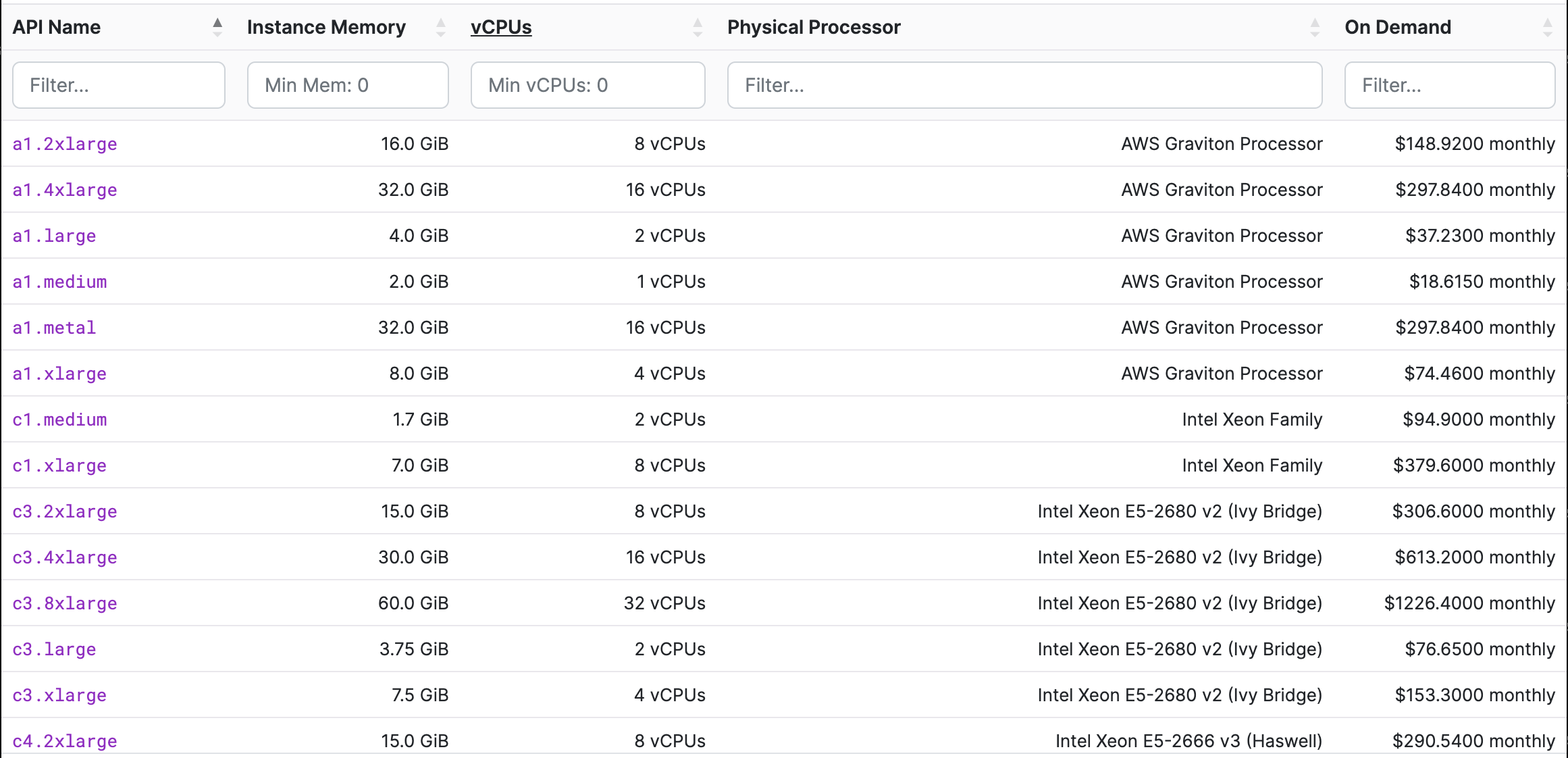

Our source of data will be Instances - which behind the scenes makes use of public AWS APIs - and we'll use its Export feature on each region. We'll choose monthly costs and narrow our selection down to just five columns - API Name, Instance Memory, vCPUs, Physical Processor, and the monthly On Demand cost. Here's an excerpt of what our data looks like:

Warning

We should note that we've chosen the most expensive EC2 charging model - on-demand. There are cheaper charging models, such as reserved instances, spot instances, and savings plans - but we'll keep things simple for now.

Let's get started:

Processing the data

The script I wrote is toggled away for brevity:
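For readers who want a feel for the processing without opening the script, here is a minimal sketch of the core step. It is an illustration and not the script used to produce the numbers in this post: the column headers, the cell formats (e.g. `8 GiB`, `$100.00 monthly`), the helper names, and the sample rows are all assumptions.

```python
import csv
import io
import re
from collections import defaultdict
from statistics import mean


def first_number(text: str) -> float:
    """Pull the first number out of a cell such as '8 GiB' or '$1,234.50 monthly'."""
    match = re.search(r"\d+(?:\.\d+)?", text.replace(",", ""))
    if match is None:
        raise ValueError(f"no number in {text!r}")
    return float(match.group())


def average_vcpu_cost_by_processor(rows, ratio: float = 9.0) -> dict:
    """Average per-vCPU cost per processor, assuming a vCPU costs `ratio` GB of RAM."""
    costs = defaultdict(list)
    for row in rows:
        if not re.search(r"\d", row["On Demand"]):
            continue  # skip instance types that have no price in this region
        monthly = first_number(row["On Demand"])
        vcpus = first_number(row["vCPUs"])
        memory_gb = first_number(row["Instance Memory"])
        costs[row["Physical Processor"]].append(monthly / (vcpus + memory_gb / ratio))
    return {processor: mean(values) for processor, values in costs.items()}


# Hypothetical export excerpt; real column names and values may differ.
SAMPLE = """API Name,Instance Memory,vCPUs,Physical Processor,On Demand
example.large,8 GiB,4 vCPUs,Hypothetical CPU A,$100.00 monthly
example.xlarge,16 GiB,8 vCPUs,Hypothetical CPU A,$200.00 monthly
other.large,16 GiB,2 vCPUs,Hypothetical CPU B,$95.00 monthly
other.xlarge,32 GiB,4 vCPUs,Hypothetical CPU B,unavailable
"""

result = average_vcpu_cost_by_processor(csv.DictReader(io.StringIO(SAMPLE)))
for processor, cost in result.items():
    print(f"{processor}: {cost:.2f}")
```

Running something like this once per region export and joining the results by processor would yield a table of the shape shown below.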

The script produced a table of the per-vCPU cost of each processor in different regions. The full table is large and is again toggled away for brevity:

But below is the table narrowed down to us-east-1 as a baseline, sa-east-1 (São Paulo) which has the highest costs, and ap-southeast-2 (Sydney) which is somewhere in between.

| Processor | EC2 Generation | us-east-1 Monthly Cost | ap-southeast-2 Monthly Cost | sa-east-1 Monthly Cost |
|---|---|---|---|---|
| AMD EPYC 7571 | 5 | $21.73 | $27.29 | $34.87 |
| AMD EPYC 7R32 | 5 | $23.00 | $29.86 | $35.24 |
| AMD EPYC 7R13 Processor | 6 | $22.85 | $29.83 | $35.21 |
| AMD EPYC 9R14 Processor | 7 | $30.65 | - | - |
| AWS Graviton Processor | 1 | $15.23 | $19.89 | - |
| AWS Graviton2 Processor | 6 | $20.31 | $26.52 | $31.30 |
| AWS Graviton3 Processor | 7 | $21.65 | $28.18 | $33.17 |
| AWS Graviton4 Processor | 8 | $22.77 | - | - |
| Intel Xeon Family | 2 | $45.86 | $55.41 | $60.37 |
| Intel Xeon E5-2670 v2 (Ivy Bridge) | 3 | $32.85 | $39.42 | $69.11 |
| Intel Xeon E5-2670 v2 (Ivy Bridge/Sandy Bridge) | 3 | $34.27 | $47.99 | $49.02 |
| Intel Xeon E5-2680 v2 (Ivy Bridge) | 3 | $31.72 | $39.95 | $49.09 |
| Intel Xeon E5-2666 v3 (Haswell) | 4 | $30.06 | $39.42 | $46.67 |
| Intel Xeon E5-2676 v3 (Haswell) | 4 | $25.27 | $31.59 | $40.18 |
| Intel Xeon E5-2686 v4 (Broadwell) | 4 | $25.27 | $31.59 | $40.18 |
| Intel Xeon Platinum 8124M | 5 | $25.38 | $33.15 | $39.12 |
| Intel Xeon Platinum 8175 | 5 | $24.26 | $30.32 | $38.66 |
| Intel Xeon Platinum 8275L | 5 | $25.38 | $33.15 | $39.12 |
| Intel Xeon 8375C (Ice Lake) | 6 | $25.38 | $33.15 | $39.12 |
| Intel Xeon Scalable (Sapphire Rapids) | 7 | $26.65 | $34.81 | $41.08 |

The cost columns show the average per-vCPU monthly cost calculated for all the non-specialized `r`/`m`/`c` instance types that have the given processor - as per the equation above. Not included in this table is the variation of the calculated cost across instance types - most processors had no variation and the maximum relative standard deviation was 9.97% (the average RSD was 1.09%). This gives us confidence that our methodology is mostly faithful to AWS's cost model.
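For reference, relative standard deviation is the standard deviation expressed as a percentage of the mean. A minimal sketch of that calculation follows; the function name is made up, the input values are hypothetical, and the choice of population (rather than sample) standard deviation is an assumption, since the post doesn't say which was used.

```python
from statistics import mean, pstdev


def relative_std_dev(values) -> float:
    """Relative standard deviation in percent: population std dev divided by the mean."""
    values = list(values)
    return pstdev(values) / mean(values) * 100


# Hypothetical per-vCPU costs for instance types that share one processor.
print(relative_std_dev([25.38, 25.38, 25.38]))  # identical costs, no variation
print(relative_std_dev([9.0, 11.0]))            # spread of 1 around a mean of 10
```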

Warning

I excluded first-generation Intel-based instance types because those instances seem to follow a different pricing model - which makes sense because they're ancient. To AWS's credit they are still supported to this day.

How Profitable Are CPUs for AWS?

A natural question arises - how much money is AWS making off of this pricing model? This isn't really possible for us to accurately answer - the profit margin is a function of so many parameters, such as the cost of the electricity that powers their data centers, the capital expenditures of those data centers, and the cost of AWS's R&D.

Nevertheless, we can do at least a little bit of conjecture for fun. We'll assume a simplistic world in which AWS has no expenditures beyond the initial price of the processor and derive how much AWS profits off of a given processor.

Let's focus on us-east-1 and take two latest-generation processors - `Intel Xeon Scalable (Sapphire Rapids)` and `AMD EPYC 9R14 Processor`. We saw in our table above that these are priced at $26.65 and $30.65 respectively.

Starting with Intel, by creating a `c7i.xlarge` instance - the instance that corresponds to the processor name - we can see in `/proc/cpuinfo` that the exact Intel model is `Intel(R) Xeon(R) Platinum 8488C`.

This model has 48 cores, giving 96 vCPUs for AWS to sell. At $26.65 per vCPU, AWS can make $2,558.40 off of this processor in a single month. Let's assume that AWS will sell a given processor for a minimum of five years - probably an underestimate - this means that each processor should bring in $153,504.

The official price for the 8488C doesn't seem to be publicly available - but a slightly less powerful model (8458P) retails for $7,121 and a slightly more powerful model (8480+) sells for $10,710. Let's approximate by splitting the difference and price the 8488C at $8,915. At that price point it would take about three and a half months for AWS to break even on the processor - and leave $144,589 in profit.
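The back-of-the-envelope arithmetic here is easy to reproduce. Below is a minimal sketch under the same simplistic assumptions (revenue comes only from vCPUs sold at the on-demand price, and the only expense is the processor's purchase price); the function name and the dictionary keys are made up for illustration.

```python
def processor_economics(vcpus: int, monthly_cost_per_vcpu: float,
                        processor_price: float, months: int = 60) -> dict:
    """Revenue, break-even time, and profit for one processor over `months` months."""
    monthly_revenue = vcpus * monthly_cost_per_vcpu
    lifetime_revenue = monthly_revenue * months
    return {
        "monthly_revenue": monthly_revenue,
        "lifetime_revenue": lifetime_revenue,
        "break_even_months": processor_price / monthly_revenue,
        "profit": lifetime_revenue - processor_price,
    }


# Inputs from the text: 96 vCPUs at $26.65 each, an estimated processor
# price of $8,915, and a five-year (60-month) lifetime.
intel = processor_economics(vcpus=96, monthly_cost_per_vcpu=26.65, processor_price=8915)
for key, value in intel.items():
    print(f"{key}: {value:,.2f}")
```

The same function can be pointed at other processors by swapping in their vCPU count, per-vCPU cost, and estimated price.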

On to AMD. We know the exact AMD model - EPYC 9R14. This model has 96 cores, giving 192 vCPUs, and at $30.65 per vCPU, that's $5,884.80 a month - or $353,088 over five years. Again the price doesn't seem to be publicly available - I'm sensing a pattern - but the EPYC 9654P and the EPYC 9684X have an equivalent number of cores and are priced at $10,625 and $14,756. Let's again split the difference and approximate the price at $12,690. At that price point it would take about two months for AWS to break even on the processor - and leave $340,398 in profit.

Note

As pointed out in a Reddit comment, this analysis is not accurate - the EPYC 9R14 has 96 cores and only 96 vCPUs! This cuts the above figures in half - $176,544 over five years, and so on.

I'll reiterate a warning from above - this analysis is based on on-demand costs, which is the most expensive AWS pricing model. Most serious cloud workloads make use of reserved instances, spot instances, and savings plans - which bring these numbers down significantly.

Conclusions

This blog post is really only the tip of the iceberg as it relates to analyzing cloud vCPU costs. There's so much more to explore, and I hope to do so in future blog posts:

- An equivalent analysis in other cloud providers

- The "bang for buck" you get from a vCPU - measuring the cost of the vCPU against its performance

- Not all clouds are created equal - virtualization infrastructure differs significantly between cloud providers, and so you might pay for the exact same physical processor in different cloud providers but get different performance

Still, I hope this helped offer some insights into cloud economics and demystify the model behind EC2 pricing.

We went through a lot of numbers, and you might want to walk away with just a single number you can keep in mind when thinking about vCPU costs. I want to give a shoutout to one of my favorite FinOps-related blog posts - Vantage's Cloud Costs Every Programmer Should Know. In that blog post they list the monthly cost of a single vCPU as $30 - we've seen that reality is substantially more nuanced, but this is a nice ballpark number to keep in mind.